Key tools, technologies, and terms

The technologies and tools supporting GenAI development are evolving quickly. Here's an overview of the technologies, terms, and principles AI developers need to know.

Python

Python remains the primary programming language for machine learning. Because it's interpreted, changes can be tested without a compile step, which makes it a good fit for data scientists who may not be expert programmers but want to run AI experiments quickly. Python has been around since the early 1990s, and a rich ecosystem of libraries has grown up around it. Not everything runs fast enough in pure Python, but Python wraps nicely around faster languages like C, so performance-critical code can be written in those languages and called from Python.
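As a minimal sketch of Python wrapping C, the standard-library `ctypes` module can call a compiled C function directly. The example below calls the C standard library's `strlen`. It assumes a POSIX system (Linux or macOS) where the C library can be located; ML frameworks use more elaborate binding layers, so treat this only as an illustration of the idea.

```python
import ctypes
import ctypes.util

# Locate the C standard library (e.g. "libc.so.6" on Linux). If find_library
# returns None, CDLL(None) falls back to symbols already loaded into the
# Python process, which includes libc on POSIX systems.
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the C signature: size_t strlen(const char *s)
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t

def c_strlen(text: str) -> int:
    """Call the C strlen function on a UTF-8 encoded Python string."""
    return libc.strlen(text.encode("utf-8"))

print(c_strlen("hello"))  # 5
```

Declaring `argtypes` and `restype` lets `ctypes` convert Python values to C types correctly instead of guessing.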

Hardware accelerators

Hardware accelerators are essential for processing complex AI computations. GPUs grew out of 3D graphics, where rendering an image means calculating many points in space and light sources at once. Accelerators found new life in machine learning and AI, which need to calculate thousands of weights and biases in parallel.

For decades, the primary computing engine of most computers has been the central processing unit (CPU). This general-purpose processor has a small number of powerful cores optimized for executing instructions in sequence, and it uses a memory cache to keep frequently used data close at hand. Hardware accelerators take a different approach: GPUs (graphics processing units) use thousands of simpler cores, and TPUs (tensor processing units) use specialized matrix-multiplication units, to process thousands of computations in parallel.

By June 2024, Nvidia held 88% of the GPU market. This helps consolidate standards, but it also creates the risk of a single point of failure, with one dominant player. In January 2025, Nvidia lost roughly $600 billion in market value after DeepSeek unveiled its R1 model, sparking concerns that cheaper Chinese models could reduce the need for expensive, high-end GPU servers.
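To show why neural network math suits parallel hardware, here is a toy sketch of one dense layer. Each neuron's output (a weighted sum of inputs plus a bias) doesn't depend on any other neuron, so the outputs can be computed one at a time (CPU-style) or all at once (accelerator-style). The weights are made-up numbers, and the thread pool only illustrates that independence; it won't actually run faster in pure Python.

```python
from concurrent.futures import ThreadPoolExecutor

def neuron_output(weights, bias, inputs):
    """One neuron: weighted sum of its inputs plus a bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def layer_serial(W, b, x):
    """Serial approach: compute each neuron one after another."""
    return [neuron_output(row, bias, x) for row, bias in zip(W, b)]

def layer_parallel(W, b, x, workers=4):
    """Parallel approach (conceptually): neurons are independent, so they can
    be dispatched concurrently. Python threads don't speed this up because of
    the GIL; a GPU would run thousands of such computations simultaneously."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(neuron_output, W, b, [x] * len(W)))

W = [[1, 2], [3, 4], [5, 6]]  # 3 neurons, 2 inputs each (illustrative values)
b = [0.5, -1, 0]
x = [1, 1]
print(layer_serial(W, b, x))  # [3.5, 6, 11]
```

Both functions return the same results in the same order; only the scheduling differs.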

Neural networks

Neural networks are the basis for most GenAI models. There are several different types that you'll encounter when considering and implementing GenAI.

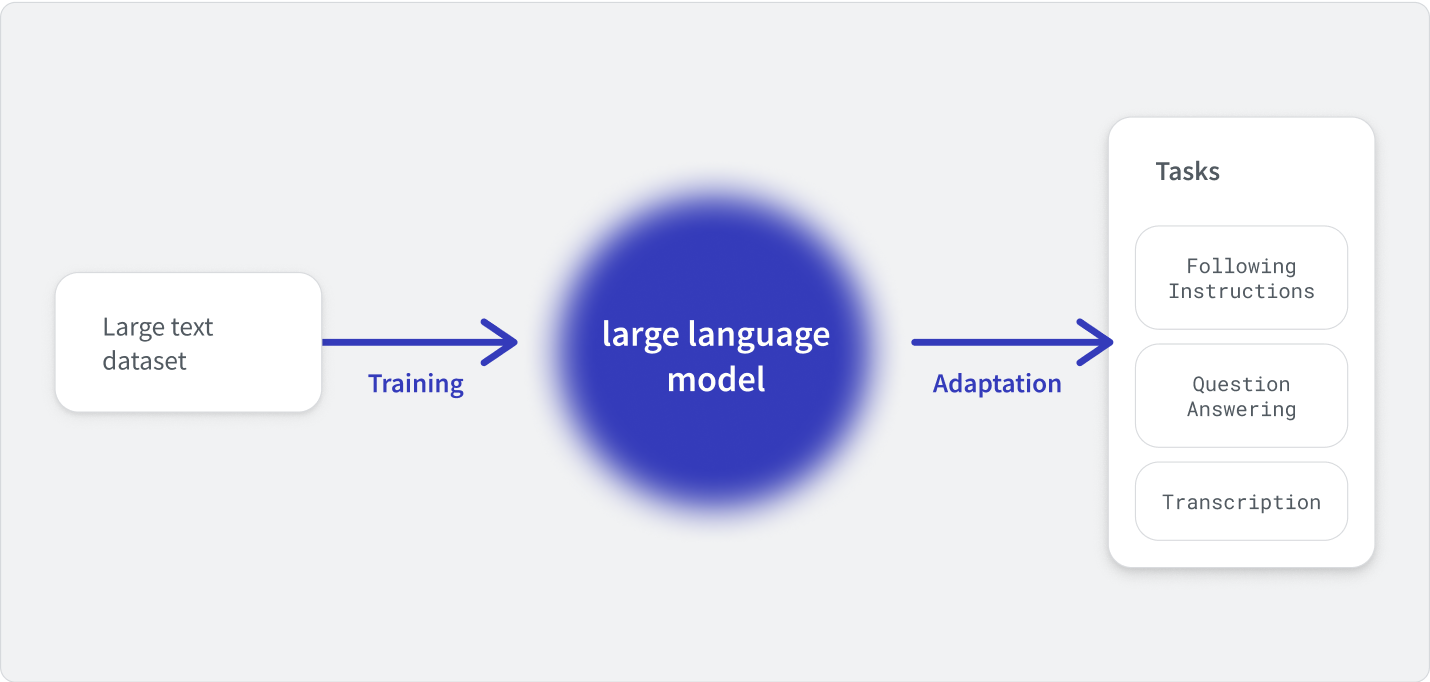

Large language models (LLMs)

LLMs are machine learning models trained on large amounts of text to understand and generate natural language. They've advanced significantly in recent years, with models like OpenAI's GPT-4 supporting multimodal interactions, including text and image analysis.

Generative adversarial networks (GANs) and synthetic data generation

A GAN pits two neural networks against each other: a generator that creates new data and a discriminator that tries to tell it apart from real data. GANs are widely used to generate synthetic data, particularly for image and video synthesis. This artificial data is used to train AI models, enhancing privacy and diversity. In 2023, Gartner predicted that by 2024, 60% of data used in AI and analytics projects would be synthetically generated.

Variational auto-encoders (VAEs)

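VAEs learn a compressed, probabilistic representation of their training data (a latent space) and sample from it to generate new data across various domains, including music and art.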

Transformer-based LLMs

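Transformer-based LLMs use a mechanism called self-attention to process all the tokens in a sequence in parallel, which speeds up natural language processing (NLP) tasks compared with earlier sequential architectures. They're also customizable for specific domains through fine-tuning.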
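The core of a transformer is scaled dot-product attention: each query vector is compared with every key vector, the scores are scaled by the square root of the key dimension and passed through a softmax, and the resulting weights are used to average the value vectors. The pure-Python sketch below shows only that formula; it assumes tiny hand-written vectors and omits the learned projections, multiple heads, and masking that real transformers use.

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(q . k / sqrt(d_k)) weights the values.
    Each query is processed independently of the others, which is what lets
    transformers handle a whole sequence in parallel."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, one dimension at a time
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Two tokens with illustrative 2-dimensional vectors (self-attention: Q = K = V)
tokens = [[1.0, 0.0], [0.0, 1.0]]
print(attention(tokens, tokens, tokens))
```

Each token attends most strongly to itself here because its query is most similar to its own key.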

Multimodal models

Multimodal LLMs handle several types of data, such as text, images, and audio. They've seen wider adoption thanks to mass-market tools like Google's Gemini and Microsoft's Copilot, which give easy access to text and visual creation inside one tool. Gartner predicts that 40% of GenAI solutions will be multimodal by 2027.

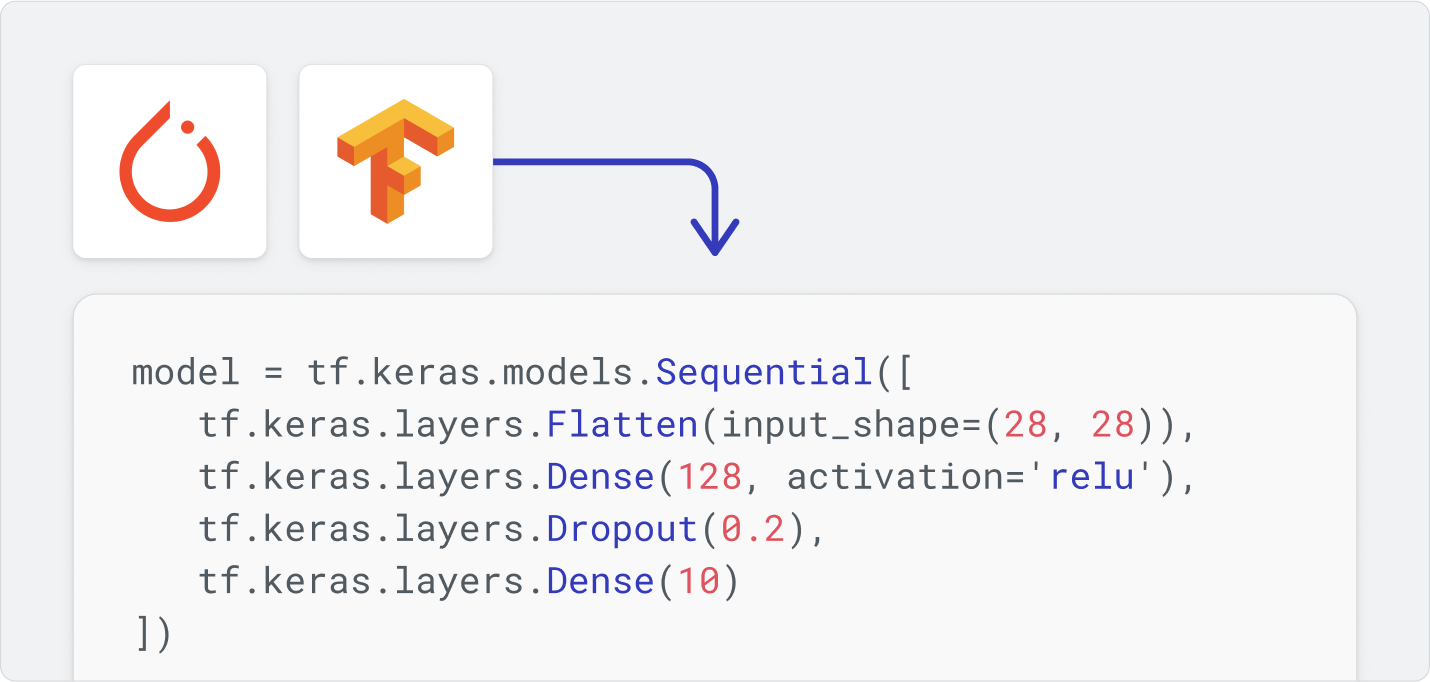

Machine learning frameworks

The math behind ML models can be difficult for developers to implement from scratch. Open-source Python libraries like PyTorch and TensorFlow handle tasks such as computing gradients automatically and running computations on GPUs, making training and fine-tuning models more accessible and standardized.
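To show the kind of math these frameworks take off developers' hands, here is a minimal sketch that trains a one-variable linear model with gradient descent, with the gradients of the mean squared error derived by hand. In PyTorch, for example, autograd (via `loss.backward()`) would compute these gradients and an optimizer such as `torch.optim.SGD` would apply the updates. The data and hyperparameters below are illustrative assumptions.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b by gradient descent on mean squared error (MSE),
    using hand-derived gradients instead of a framework's autograd."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for each example under the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE with respect to w and b
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Even this two-parameter model needs manual calculus; for networks with millions or billions of parameters, automatic differentiation is what makes training practical.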

Data lakehouses

GenAI relies on large amounts of data for training, fine-tuning, and semantic search. This data is often stored in data lakehouses, which combine the structured reliability and low latency of data warehouses with the cost efficiency of a data lake. AI processes can access business intelligence and analytics data, enabling more relevant insights from AI systems.

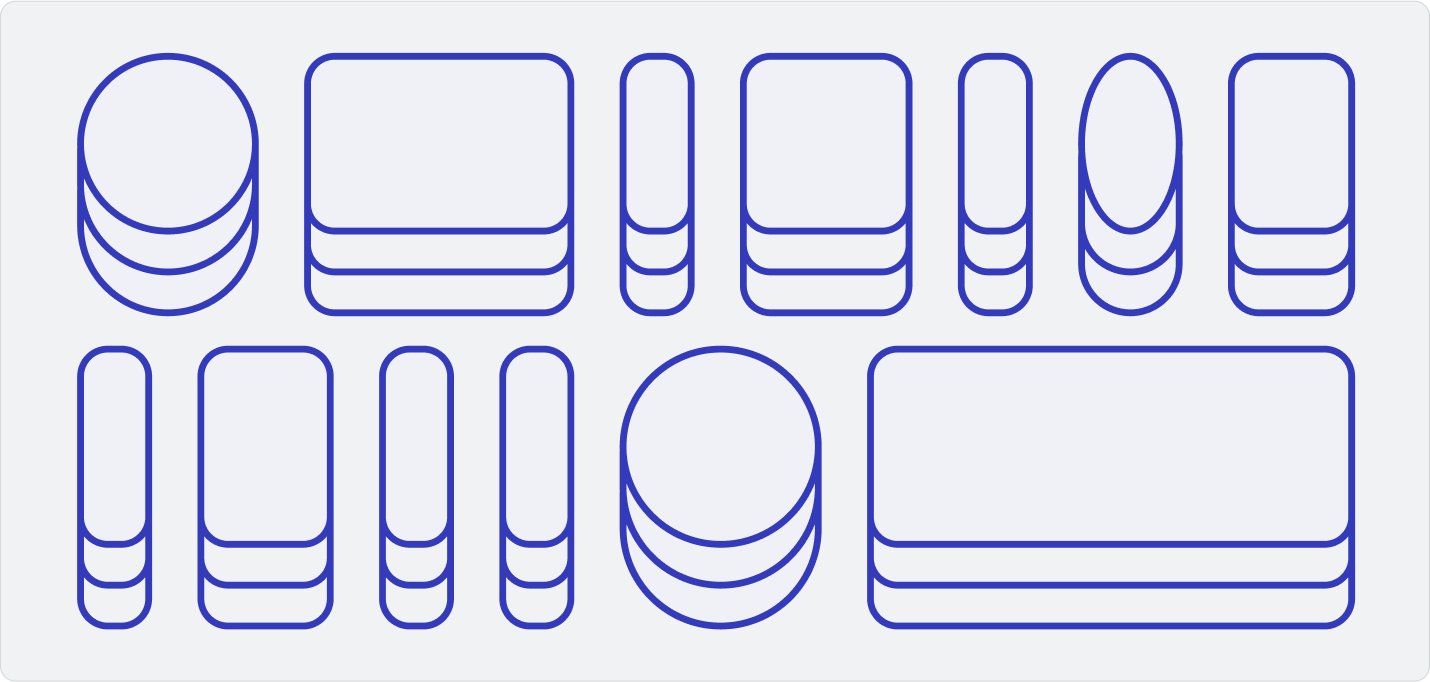

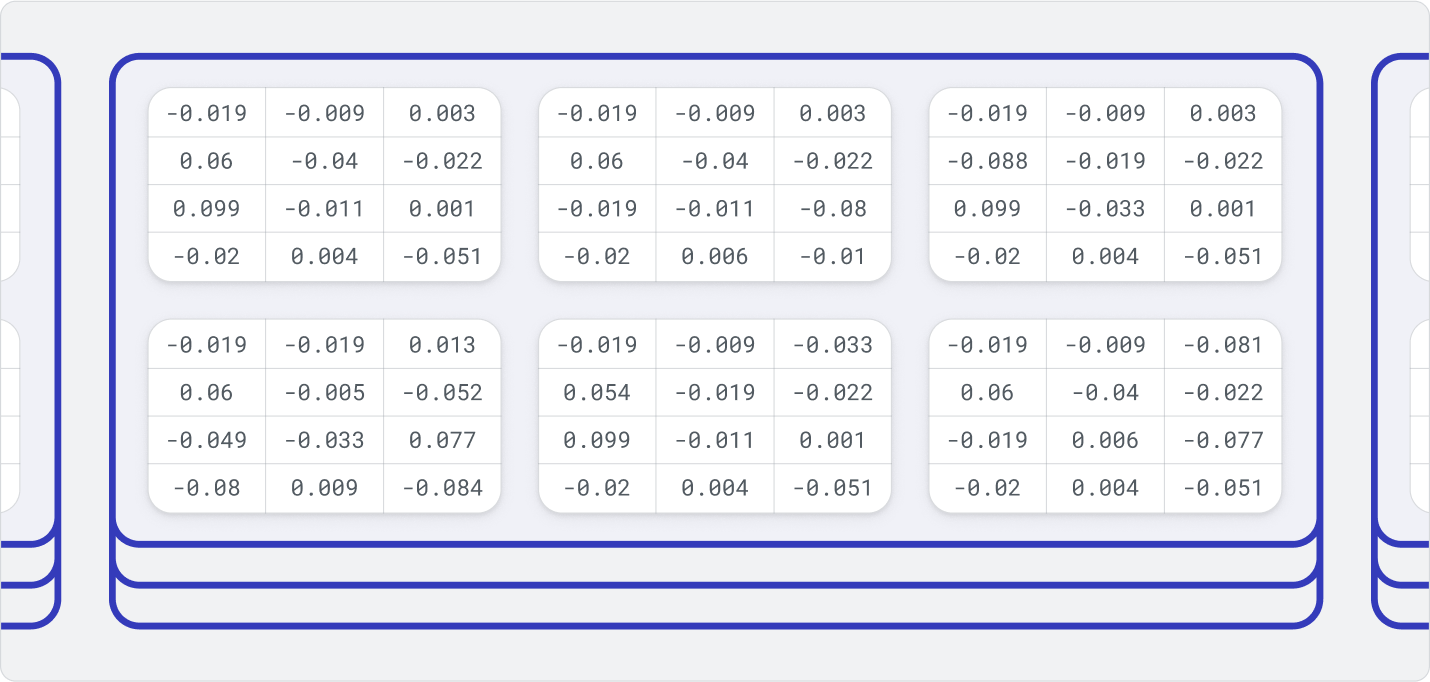

Vector databases

LLMs and embedding models convert text into lists of numbers called vectors (or embeddings), which work like coordinates on a map: pieces of text with similar meanings end up close together. Vector databases store these high-dimensional vectors efficiently, even when each one has thousands of dimensions, and make it fast to search and compare them. They're crucial for retrieval-augmented generation (RAG) and semantic search.
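The core operation of a vector database is a similarity search. The sketch below is a hypothetical in-memory store (`TinyVectorStore` is an illustrative name, not a real library) that ranks stored vectors by cosine similarity to a query. It assumes made-up 3-dimensional vectors in place of real embeddings and uses brute-force search; production vector databases typically use approximate nearest-neighbor indexes to stay fast at scale.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

class TinyVectorStore:
    """Hypothetical in-memory store: brute-force search over all vectors."""

    def __init__(self):
        self.items = []  # list of (item_id, vector) pairs

    def add(self, item_id, vector):
        self.items.append((item_id, vector))

    def search(self, query, k=2):
        """Return the ids of the k stored vectors most similar to the query."""
        scored = [(cosine_similarity(query, vec), item_id) for item_id, vec in self.items]
        scored.sort(reverse=True)
        return [item_id for _, item_id in scored[:k]]

store = TinyVectorStore()
# Made-up 3-dimensional "embeddings"; real ones come from an embedding model
store.add("cat", [0.9, 0.1, 0.0])
store.add("kitten", [0.85, 0.15, 0.05])
store.add("car", [0.0, 0.2, 0.95])
print(store.search([0.88, 0.12, 0.02], k=2))  # ['cat', 'kitten']
```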

Cloud and edge AI

Deploying AI models on edge devices, such as smartphones, cameras, or IoT sensors, enables real-time processing with reduced latency, enhanced data privacy, and reduced dependence on network connectivity. Cloud AI centralizes the processing of data on remote cloud servers. Developers can access advanced tools without investing heavily in development or hardware, using on-demand computing resources instead of physical infrastructure.

Federated learning

Federated learning is a decentralized machine learning method where multiple devices train a shared AI model without sending their raw data to a central server. Each device trains a local model on its own data and sends only the model updates to a central server, which combines them into an improved global model. This enhances privacy and can reduce data transfer by up to 90%. The method can be used to analyze user behavior while maintaining data security. TensorFlow Federated (TFF) is one prominent tool in this space.
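The train-locally, aggregate-centrally loop can be sketched with federated averaging (FedAvg), a common aggregation strategy in which the server averages client models weighted by how much data each client holds. This is a minimal pure-Python illustration, not TensorFlow Federated's API: the one-parameter model `y = w * x`, the client data, and the single local gradient step per round are all simplifying assumptions.

```python
def local_update(w, data, lr=0.1):
    """Hypothetical on-device training: one gradient step on mean squared
    error for the model y = w * x. The raw (x, y) pairs stay on the device;
    only the updated weight is returned."""
    n = len(data)
    grad = (2 / n) * sum((w * x - y) * x for x, y in data)
    return w - lr * grad

def federated_average(client_weights, client_sizes):
    """Server-side aggregation: average the client models, weighted by the
    number of examples each client trained on."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total

# Each inner list is one device's private data, drawn from y = 3x
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]

global_w = 0.0
for _ in range(50):  # communication rounds
    # Devices train locally starting from the current global model
    updates = [local_update(global_w, data) for data in clients]
    # Server combines the updates without ever seeing the raw data
    global_w = federated_average(updates, [len(d) for d in clients])
print(round(global_w, 4))  # 3.0
```

Real deployments usually run several local training epochs per round and add protections such as secure aggregation.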

AI model principles

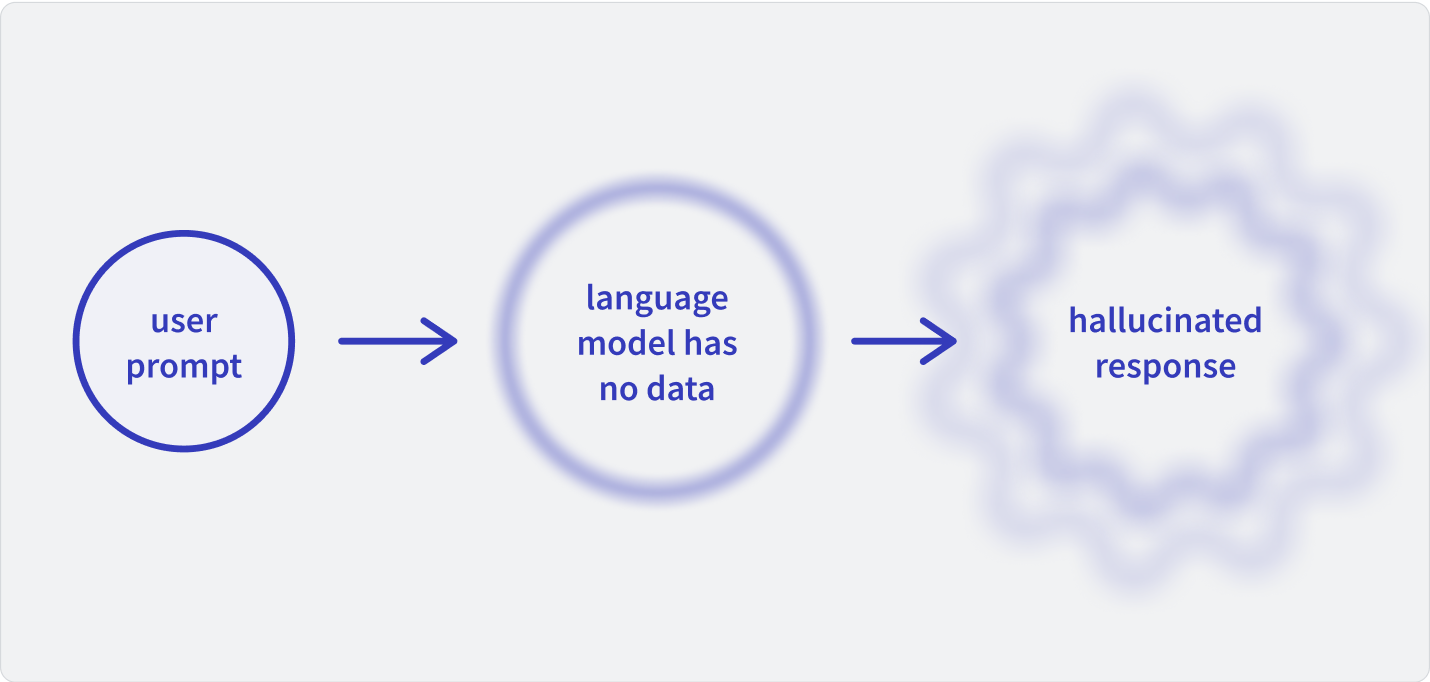

Hallucinations

Hallucinations are instances when AI models generate plausible-sounding but incorrect information. The bad news: many AI researchers argue that hallucinations are a feature rather than a bug in LLM tools, because LLMs don't look up facts in a store of verified knowledge; they're trained to produce plausible-sounding responses. Addressing hallucinations remains a priority for developing more reliable AI models. Retrieval-augmented generation (RAG), which grounds a model's responses in documents retrieved from specified data sources, can reduce hallucinations.
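The retrieval half of RAG can be sketched in a few lines: find the documents most relevant to a question, then place them in the prompt with an instruction to answer only from that context. In this minimal sketch, simple word overlap stands in for the embedding-based vector search a real system would use, `build_prompt` and its template are hypothetical, and the call to an actual LLM is left out.

```python
import re

def tokenize(text):
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, k=1):
    """Rank documents by how many words they share with the question
    (a stand-in for similarity search in a vector database)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=1):
    """Hypothetical prompt template that grounds the model in retrieved text."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

docs = [
    "Our refund window is 30 days from the date of purchase.",
    "Support is available Monday to Friday, 9am to 5pm.",
]
print(build_prompt("How many days is the refund window?", docs))
```

Instructing the model to admit when the context lacks an answer is what discourages it from filling gaps with invented facts.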

Model drift

Model drift happens when an AI model's performance degrades because the data it sees in production has changed from the data it was trained on. Drift reduces the accuracy of AI results. Monitoring and mitigating drift is crucial for supporting responsible AI practices, which are a requirement of new regulations like the EU AI Act. The Act affects organizations that develop AI systems or use the output of AI systems in the EU.
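Drift has to be detected before it can be fixed. One widely used metric is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins in a baseline sample (such as training data) versus a recent sample (such as production inputs). The sketch below assumes a single numeric feature, hand-picked bin edges, and made-up samples; the commonly cited rule of thumb that a PSI above about 0.25 signals a significant shift is an industry convention, not a regulatory threshold.

```python
import math

def psi(expected, actual, bins):
    """Population Stability Index between a baseline sample and a recent
    sample of one numeric feature, using the given bin edges."""
    def proportions(values):
        counts = [0] * (len(bins) - 1)
        for v in values:
            for i in range(len(bins) - 1):
                if bins[i] <= v < bins[i + 1]:
                    counts[i] += 1
                    break
        # A small floor avoids log(0) when a bin is empty
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

bins = [0, 25, 50, 75, 100]
training = [10, 20, 30, 40, 60, 70, 80, 90]    # baseline: evenly spread
stable = [12, 22, 33, 44, 55, 66, 77, 88]      # same shape as the baseline
shifted = [70, 72, 80, 85, 88, 90, 95, 99]     # skewed toward high values

print(psi(training, stable, bins))         # 0.0
print(psi(training, shifted, bins) > 0.25)  # True
```

In practice, a PSI check like this would run on a schedule, and a high value would trigger investigation or retraining.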

Practices that help manage model drift and support responsible AI include:

- Fine-tuning: Updating a pre-trained model with new data to improve its performance.

- Explainability: The degree to which the internal workings of an AI system can be explained in human terms.

- Debiasing: Techniques aimed at reducing bias in AI models to ensure fairness.