Building your GenAI tech stack

For some companies, access to an AI-powered chatbot or code generator will be enough. These are widely available as a service or via an API. For those looking to get a deeper integration, however, understanding the technologies used by AI and how they fit into your existing tech stack is essential. The bedrock of GenAI is the base foundation model. But which one should you use?

Build and train your own foundation model in-house

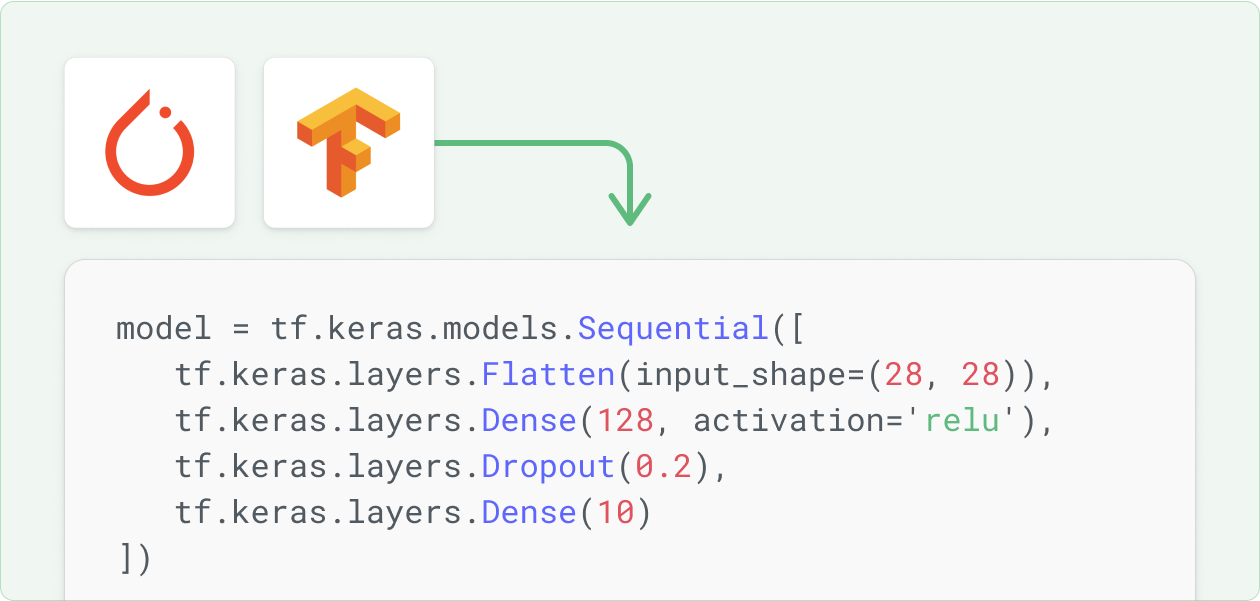

This is the most challenging route and should only be attempted by companies with significant resources and existing expertise among your tech leads. It will require specialized hardware—GPUs and TPUs—both of which are difficult and expensive to obtain (as of publication). You will need engineers who can work with Python frameworks like Tensor Flow or PyTorch to build the neural network and its training environment. The talent, the hardware, and the training all add up to a serious expense.

Work with a third-party vendor to create your own model

A growing number of providers are making this a more realistic and affordable choice. As this field becomes more sophisticated, it becomes easier to estimate the optimal size and cost for your specific requirements.

Utilize an open-source model

This is the simplest approach and the best choice for those with smaller teams who are just getting started. Communities like Hugging Face are a great place to get started if you want to learn about what's available in the world of open-source AI.

Components of GenAI

GenAI leverages additional advanced technologies that require thoughtful integration into your existing systems. Understanding these components is crucial for effective implementation:

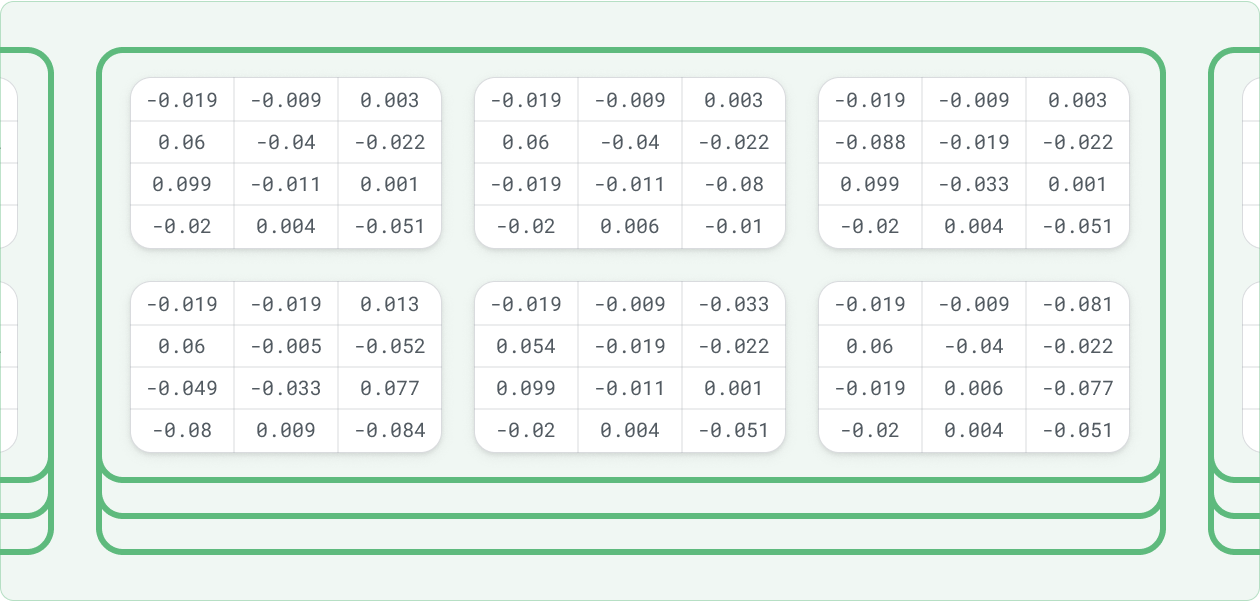

Vector storage, a database that stores the vectors output by the embedding model.

Other storage, possibly a data lakehouse or other unstructured object storage.

A machine-learning framework like PyTorch, Keras, or TensorFlow. Python is the primary language for these, but there are frameworks for other languages.

Access to GPUs or TPUs for accelerated inference and model training.

Where to start?

It can be intimidating to consider where to start with these technologies, as research and development is advancing rapidly right now. Multiple organizations offer access to LLMs via APIs, and open-source models proliferate on sites like Hugging Face, so finding which one fits your organization can be daunting. You might even want to train your own model if your needs are specific enough. But a wide variety of AI—including foundation models—are available as services. For the rest of the tech, you’ll likely need them, but specifically which ones and their requirements will depend on what you’re doing with GenAI.

Fit the tech around the use case

Before diving into selecting tech, buying infrastructure and cloud compute, and integrating GenAI tech into your codebase, you’ll need to think about your use case:

- Do you want the AI to serve as a general interface to your app or do you want to have it access specialized or proprietary data?

- Do you need to expose AI responses to users or will this be an entirely internal tool?

- Are you looking to automate or interact with other processes in your application or organization?

Custom data will require a data platform with vector storage, embedding models, and, if you want retrieval-augmented generation, an orchestration library like LlamaIndex. Responses sent to the user may need additional governance or prompt engineering to manage potential hallucinations, unintentional biases, or prompt injections, as well as an inferencing stack. This often involves building a hidden layer that provides the LLM with base instructions and rules for how to handle and respond to prompts. Automated prompting and prompt handling may require fine-tuning an LLM on GPUs to ensure that the responses fit the intended systems.

With any of these use cases (and more), it’s important that everyone in the organization be on the same page. GenAI integrations can be large, expensive projects that touch a lot of teams, so having a plan about the tech before diving in can make the eventual execution much easier.