How GenAI evolved in 2024

AI hype reached a fever pitch in 2024, fueled by massive investment in the technology and a surge in product licenses. PwC projects that AI could contribute $15.7 trillion to the global economy by 2030, with the market growing at an estimated 38% annually. OpenAI's valuation soared to more than $150 billion after it secured the largest venture capital round in history. These investments underpin AI's potential but have also raised questions about long-term profitability and growth, especially as training frontier models becomes increasingly costly.

For business users, AI is finally starting to bear fruit: 73% of companies already use AI in at least one business area. Startups are stitching together different AI tools to automate work, while enterprises use both bespoke and off-the-shelf solutions for tasks ranging from personalizing sales emails to optimizing supply chains.

LLMs went mainstream

For mature adopters, large language models (LLMs) moved last year from pilot programs to generative AI (GenAI) tools embedded in many workflows. OpenAI's ChatGPT, Microsoft's Copilot, and Anthropic's Claude competed to become your LLM of choice. AI companies hype each release as a step change, as with OpenAI's 12 Days of Shipmas in December 2024, which launched new products and features including Sora, an advanced video generation tool.

Beyond summarizing your scrum action items, AI solutions and the rising demand for compute power are driving foundation model advancements in life-enhancing fields like biology, genomics, and neuroscience. More capable AI models have bolstered cybersecurity with improved attack detection and real-time network protection. Edge AI development and reduced reliance on the cloud are improving latency for IoT and mobile applications.

While some early users are reaping rewards, many businesses are still at the cautious adoption stage and have not yet achieved the promised productivity gains. Gartner reported that fewer than 4% of IT leaders find Microsoft Copilot valuable. Where data access controls were inadequate, the tool surfaced sensitive company data such as salaries and HR files to employees internally. Successful organizations need a defined plan for adoption and for integration with IT and security systems, and they must resist "shiny object syndrome" with each new and improved AI model launch.

CodeGen tools streamline development

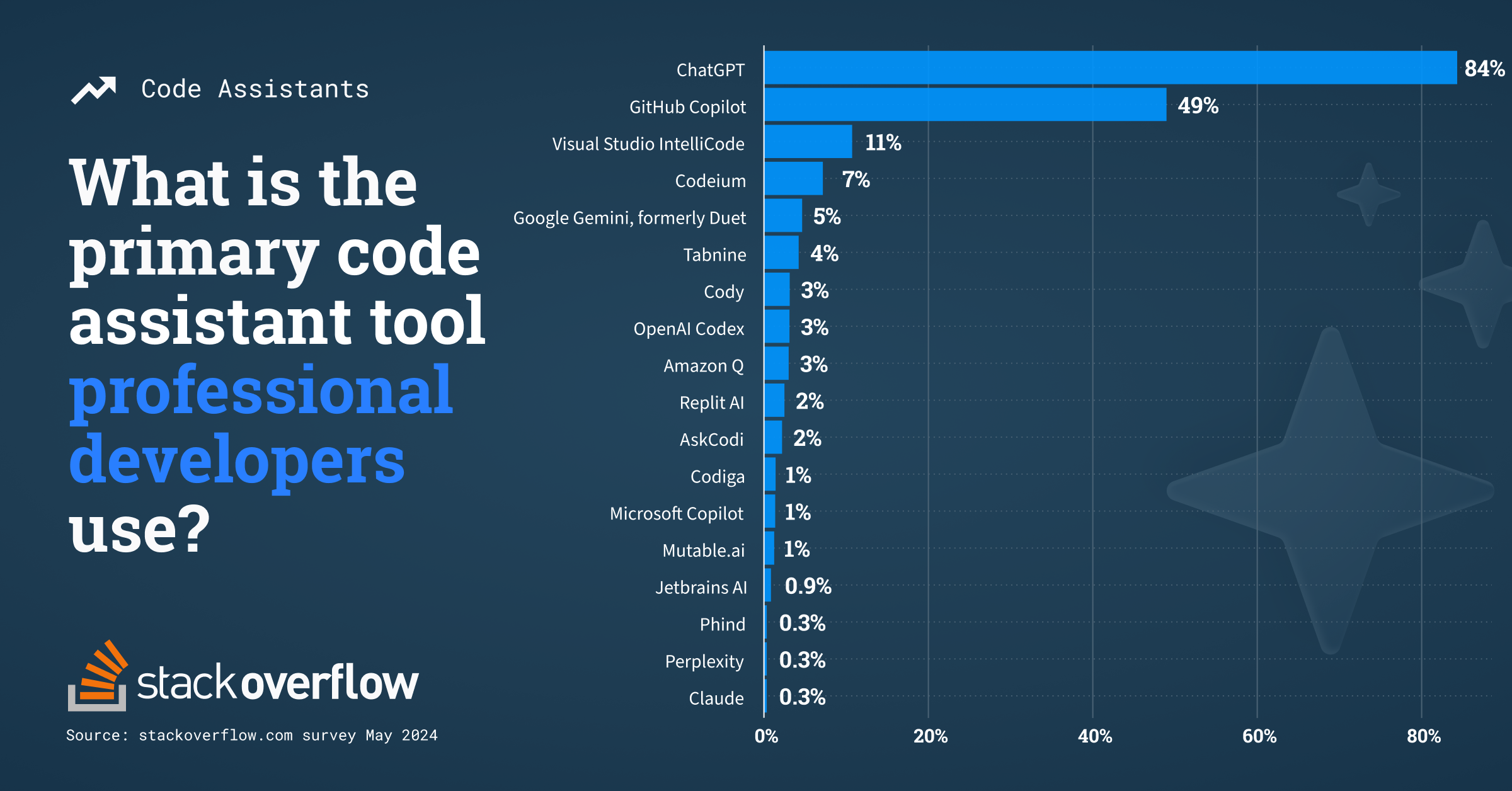

AI code generation tools are rapidly gaining traction among developers.

The AI code tools market is projected to triple in value to $12.6 billion by 2028. By then, Gartner predicts, three in four enterprise software engineers will use AI code assistants, up from one in ten in 2023. Among our own survey respondents, 76% currently use or plan to use code assistants. Google is at the forefront of this adoption: in its Q3 2024 earnings call, it announced that more than 25% of its new code is now generated by AI.

Context windows have expanded to one million tokens or more, letting models process vast amounts of code or documentation at once. This streamlines tasks like large-scale code refactoring and summarizing documentation repositories.
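To make that scale concrete, here is a minimal Python sketch that estimates whether a set of files fits into one context window and, if it does not, groups the files into window-sized batches. It assumes a rough ratio of 4 characters per token instead of a real tokenizer. The functions `estimate_tokens` and `pack_files` and the sample file contents are hypothetical illustrations, not any vendor's API.

```python
import math
from typing import Dict, List

# Assumption: roughly 4 characters per token, a common rule of thumb.
# Real tokenizers differ by model, language, and code style.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Rough token estimate derived from character count."""
    return math.ceil(len(text) / chars_per_token)


def pack_files(files: Dict[str, str], window_tokens: int, reserve_tokens: int = 0) -> List[List[str]]:
    """Greedily group files into batches that each fit one context window.

    reserve_tokens leaves headroom for instructions and the model's reply.
    A single file larger than the budget still gets its own batch; in
    practice it would need to be split further.
    """
    budget = window_tokens - reserve_tokens
    if budget <= 0:
        raise ValueError("reserve_tokens must be smaller than window_tokens")
    batches: List[List[str]] = []
    current: List[str] = []
    used = 0
    for name, text in files.items():
        cost = estimate_tokens(text)
        # Start a new batch when this file would overflow the current one.
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(name)
        used += cost
    if current:
        batches.append(current)
    return batches


# Hypothetical repository contents: about 100k, 500k, and 600k tokens.
repo = {"a.py": "x" * 400_000, "b.py": "y" * 2_000_000, "c.py": "z" * 2_400_000}
print(pack_files(repo, window_tokens=1_000_000, reserve_tokens=50_000))  # [['a.py', 'b.py'], ['c.py']]
print(pack_files(repo, window_tokens=128_000))  # [['a.py'], ['b.py'], ['c.py']]
```

With a one-million-token window, the three files fit into two requests. A 128,000-token window needs three requests and would, in practice, also require splitting the two oversized files. A production tool would use the model's own tokenizer for accurate counts.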

Code assistants are becoming more advanced, offering real-time code generation, automated bug detection, and performance optimization. Cursor, an AI-driven code editor, soared in popularity and secured $60 million in funding in 2024. It streamlines tasks like creating database schemas and generating user interfaces. In an A/B test, developers using GitHub Copilot completed a coding task 55% faster than those without it, saving about 90 minutes on average.

LLM performance is improving, with better reasoning and multimodal capabilities for processing text and images. Yet accuracy remains a concern, because hallucinations are still an inherent feature of these models rather than a bug. In our annual survey, 38% of developers said AI assistants give inaccurate responses at least half the time. This creates extra validation and busywork that undermines AI productivity goals. LLMs still struggle to deliver accurate code when a task demands deep context, involves high complexity, or touches obscure technologies.

Where next: Narrow AI tools to agentic AI

General-purpose LLMs like GPT-4 address a wide range of needs, while niche AI tools, often built on OpenAI APIs, offer specialized functionality. Platforms like Hugging Face, a hub for open-source models, now host more than 250,000 pre-trained models that help developers build custom solutions. IT decision-makers must weigh requests for "narrow AI" (AI tools that complete specific tasks) against broader company licenses, and they must balance the opportunities with security concerns.

Agentic AI represents the next stage of AI development. Agentic systems will autonomously plan, reason, and execute tasks across complex workflows without direct human input. Potential applications range from autonomous software management to advanced robotics.
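Agentic systems commonly implement the cycle of planning, reasoning, and execution as a loop. In each pass, a model chooses an action, a tool carries it out, and the resulting observation informs the next decision. The minimal Python sketch below assumes a rule-based `plan_next_step` function as a stand-in for an LLM. The tools `run_tests` and `apply_patch` are hypothetical and only change an in-memory state.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, bool]
Tool = Callable[[State], str]


# Hypothetical tools: in a real system these might wrap a test runner
# or a code-editing API. Here they only update an in-memory dict.
def run_tests(state: State) -> str:
    """Pretend test run that passes only after a patch has been applied."""
    state["tests_passed"] = state.get("patched", False)
    return "tests passed" if state["tests_passed"] else "tests failed"


def apply_patch(state: State) -> str:
    """Pretend code change that fixes the failing test."""
    state["patched"] = True
    return "patch applied"


TOOLS: Dict[str, Tool] = {"run_tests": run_tests, "apply_patch": apply_patch}


def plan_next_step(state: State, history: List[Tuple[str, str]]) -> Optional[str]:
    """Rule-based stand-in for the model's reasoning step.

    Returns the name of the next tool to call, or None once the goal is met.
    """
    if state.get("tests_passed"):
        return None
    last_observation = history[-1][1] if history else None
    if last_observation == "tests failed":
        return "apply_patch"
    return "run_tests"


def run_agent(max_steps: int = 10) -> List[Tuple[str, str]]:
    """Plan -> act -> observe loop, capped by a step limit as a safety guard."""
    state: State = {}
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        action = plan_next_step(state, history)
        if action is None:
            break
        observation = TOOLS[action](state)
        history.append((action, observation))
    return history


for action, observation in run_agent():
    print(f"{action}: {observation}")
```

The step limit in `run_agent` is a simple guard that stops an agent from looping indefinitely. Agentic systems in production would need further controls, such as permission checks and human review of risky actions.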

Responsible AI in the spotlight

In 2024, conversations about AI risk shifted from the existential threat of artificial general intelligence (AGI) to pragmatic issues like vulnerabilities, bias, and misuse, prompting calls for stronger safeguards.

The EU AI Act entered into force in August 2024, with its obligations phasing in over the following years. It affects organizations whose AI system outputs are used in the EU. The Act requires developers to prioritize responsible AI and robust data management practices. It bans real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, with narrow exceptions, as well as manipulative techniques that distort people's behavior. High-risk applications in areas such as law enforcement and recruitment must meet strict requirements to ensure fairness, equity, and transparency. In the US, state-led efforts have shaped preliminary frameworks, though the change of administration in 2025 could stall outgoing President Biden's Blueprint for an AI Bill of Rights.

AI skills gaps could hold back growth

The rapid pace of AI development has created significant skills gaps across industries. AI skills have a short "half-life," becoming outdated roughly twice as fast as other skills. To keep pace with this fast-evolving technology, developers and other technologists need to invest in continuous learning. Professor Ethan Mollick of the Wharton School suggests spending about 10 hours exploring each new AI system. He recommends starting with tasks you know well and gradually expanding until the "jagged frontier" of the system's capabilities becomes clear.

To an experienced developer's eye, errors in AI-generated code are easier to spot and test. For early-career developers, flaws in AI output can be harder to identify and correct, because that output often looks plausible at first glance.

Prompt engineering has evolved from a novel skill to a critical competency. Effective prompting remains crucial for technical and creative briefs. For simpler tasks, LLMs are becoming more intuitive, requiring less user input to understand queries and making AI more accessible to non-technical users.

Where AI is heading for developers in 2025

In 2024, AI shifted from an emerging technology to an essential tool for ambitious organizations. Experts like Yale University's Luciano Floridi predict that the AI hype cycle will follow the path of other general-purpose technologies like the internet. If so, 2025 could be the year AI reaches its "Plateau of Productivity," when mainstream adoption takes off. Developers must keep testing tools, identify the right use cases for integrating AI into their workflows, and build responsible AI principles into new systems.