Synthetic data

What is synthetic data?

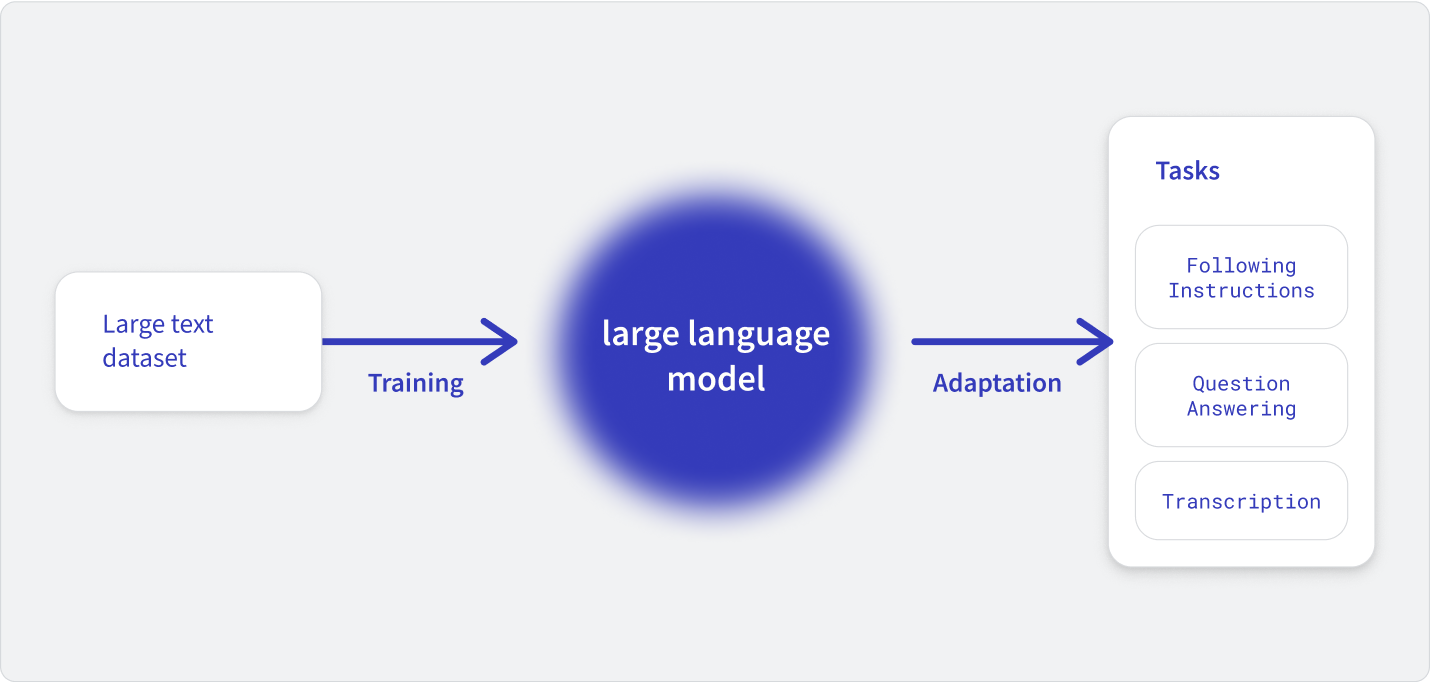

With machine learning, especially the large language models and other models currently in vogue with GenAI, getting good outputs means training those models on a lot of data—terabytes of text for even the smallest current models. A paper by researchers at Google Deepmind found the optimal number of tokens—a fraction of text—per parameter is around 15, though most of the top models are using 1000 to 2000 tokens per parameter. GPT-4 has over 1000 parameters and was trained on 1000 terabytes of data. Newer models have more parameters trained on more data.

Further improvements to these LLMs means more data, whether that is by training for more parameters or overtraining each parameter. The creators of this data—humans—saw AI’s insatiable hunger for our work and pushed back. We had worked hard on it and didn’t appreciate being fodder for someone else’s product and asked for recognition of their contribution (if not payment). And now many LLM companies have begun crediting the people who created their content.

There’s a hard limit on how much data is available for training. Even if you’re compensating all the copyright holders (or willing to risk their wrath), the amount of data available on the internet and the world is finite. Researchers estimate that model trainers will run out of human-created data between 2026 and 2032. At that point, LLM trainers will need to accept this ceiling or find other avenues for training data.

One that has shown some promise is synthetic data. This is data that is created by a machine process, whether that’s an LLM or a computer simulation. For machine learning processes hungry for data, synthetic data can provide. It has secondary uses, too, as a source of focused data or a privacy screen.

More training data

If what models need is more data, then computers can do that. By using existing machine learning models to generate training data, you can train up a model on the cheap using the results of other training processes. Combined with human-generated data, this can allow you to create larger models based on better-formatted data.

For some models, synthetic data may be the only way to get complete sets. For use cases like autonomous driving, you can build models with more complete training data by using synthetic data generated by simulations.

“It’s not possible to acquire training data that represents every possible driving scenario that could occur. In this case, synthetic data is a useful method to introduce the system to as many different situations as possible.”Kalyan Veeramachaneni, principal research scientist at MIT and co-founder of DataCebo

Recently, the DeepSeek R1 model showed the power of good synthetic data and targeted training sets. Reports claim that it used OpenAI to produce responses to train its model in a process known as distilling. While the licensing issues are currently in question here, relying on the data produced by another LLM can certainly lower the costs of model training.

There is a danger with synthetic data as the primary training source: model collapse. This is when the repeated hallucinations, biases, and errors produced by any model amplify when used to train other models. The outliers in the original statistical model are lost, and the new model uses a narrower statistical distribution. While that would likely remove some of the more comical AI fails, it would also remove the full breadth of understanding.

One of the current dangers around AI is the amount of AI-generated content now on the internet. GenAI has been used to quickly create SEO-friendly primers for every organization trying to rank for a given keyword. Anyone training on a full crawl of the internet is going to be gathering up this content and putting themselves at risk for model collapse.

Optimized training data

While a general purpose model trained on synthetic data is at risk of model collapse, some model trainers have used synthetic data as a focused training set to get better-than-average results out of small models. A group from Microsoft trained their phi-1 model on a synthetic Python textbook and exercises with answers. They created the textbook by prompting GPT 3.5 to create topics that would promote reasoning and algorithmic skills. The final model has 1.5B parameters and matches the performance of models with 10x the number of parameters.

Focused data, even when produced by another LLM, can train a model to punch above its weight for a fraction of the cost.

“We conjecture that language models would benefit from a training set that has the same qualities as a good ‘textbook’: it should be clear, self-contained, instructive, and balanced.”

Smaller, targeted LLMs not only provide more bang for their buck from training costs, but they are also cheaper to run inference and fine-tuning on. If you want resource and cost efficiency and don’t need the creativity and comprehensiveness of a massive model, you might do better by selecting an LLM with fewer parameters.

Privacy

Another common use for synthetic data is to protect privacy. In the course of gathering data, whether about customers or their usage of an application, you may want to analyze it or share it with vendors. But that could expose your customers’ PII and leave them vulnerable for exploitation. Synthetic data creates a statistically similar data set that doesn’t have the same risks of PII leakage.

On the Stack Overflow Podcast, John Myers, CTO and cofounder of Gretel, told us how this works: “What our synthetic data capability does is build a machine learning model on the original data, at which point you can throw out the original data. And then you can use that model to create records that look and feel like the original records. We have a bunch of post-processing that removes outliers or overly similar records, what we call privacy filtering.”

This privacy-filtered data can then be used instead of the original data while maintaining the general shape of that data. You can run analytics on it, train other models, or use it in demos. “Synthetic data needs to meet certain criteria to be reliable and effective—for example, preserving column shapes, category coverage, and correlations,” said Veeramachaneni, the MIT research scientist. “To enable this, the processes used to generate the data can be controlled by specifying particular statistical distributions for columns, model architectures and data transformation methods.”

This statistically similar data can then be used to train other models to produce responses that have no chance of leaking any sort of private or personally-identifiable information. These models can make accurate predictions on production data without giving away sensitive information to your contractors. And they aren’t vulnerable to model inference attacks or re-identification attacks.

For model trainers looking for more data, targeted data, or depersonalized data, synthetic can be even better than the real thing. It can add to existing models and push a model to perform in desired ways. However, if all you’re using is synthetic data, then you are at risk of model collapse.