What’s on the market?

As you’re getting your GenAI program up and running or building to the next level, you’ll be interested in what’s already available on the market. Since ChatGPT captured the public imagination when it was released in November 2022, researchers, open-source developers, and companies have been working to create models, techniques, and software that take advantage of the possibilities offered by these new ideas.

For anyone integrating GenAI in their software, this is a gold mine of opportunity. You can get a huge head start on integrating GenAI features by building on what others have already created. Here’s a quick overview of the landscape.

Foundation models

There are several types of foundation models that you’ll be interested in:

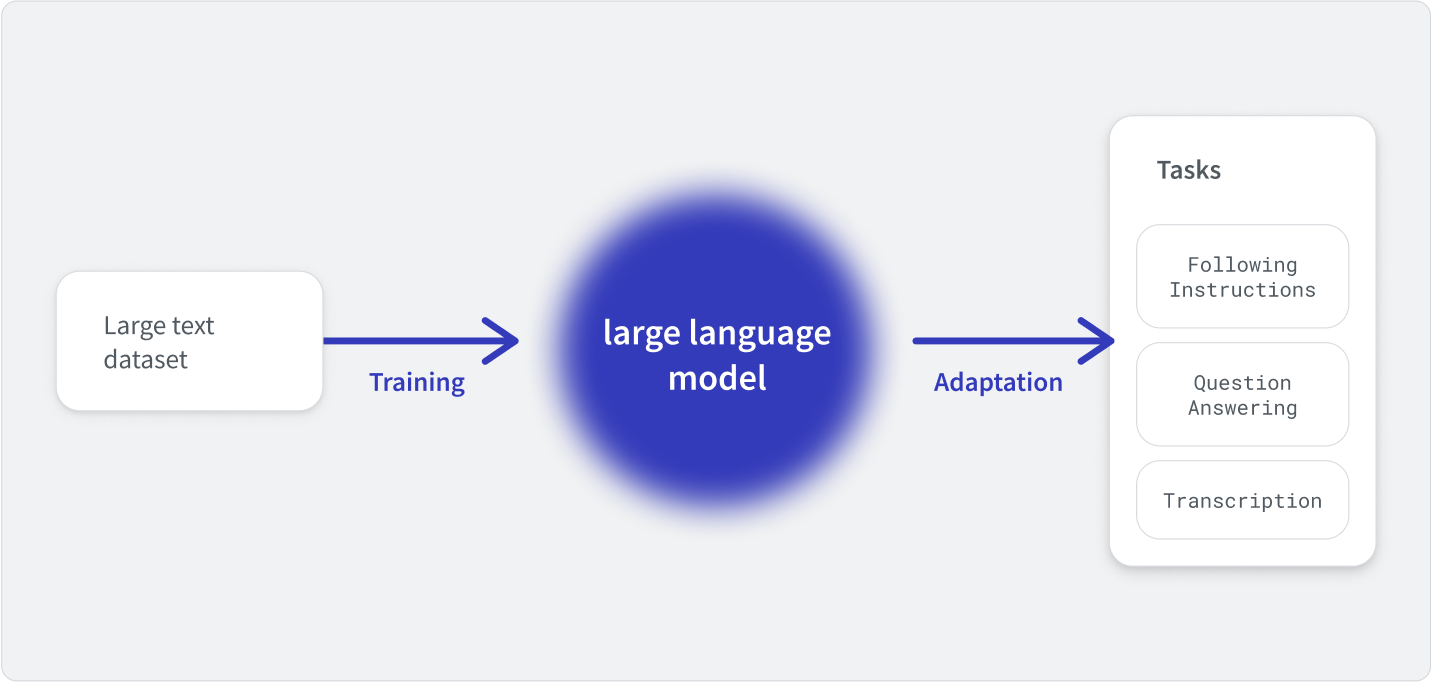

Large language models (LLMs)

These are trained on massive text datasets to provide general-purpose language generation and understanding. This category includes OpenAI's GPT models (GPT-4o and o1), Google's Gemini, Meta's Llama 3, and Anthropic's Claude 3. This category is getting more crowded and more advanced as companies like Amazon, Nvidia, Databricks, DeepSeek, IBM, and Alibaba Cloud have released major general LLMs.

Images

A number of models and services can generate and understand images. OpenAI's DALL-E 3, Google Brain's Imagen, Stability AI's Stable Diffusion, and Midjourney are major players, though not all of them allow programmatic access at this time. A number of specialized image generators have arisen: Ideogram for accurate text, Adobe Firefly for integrating generative AI with photos, and Generative AI by Getty for commercially safe images.

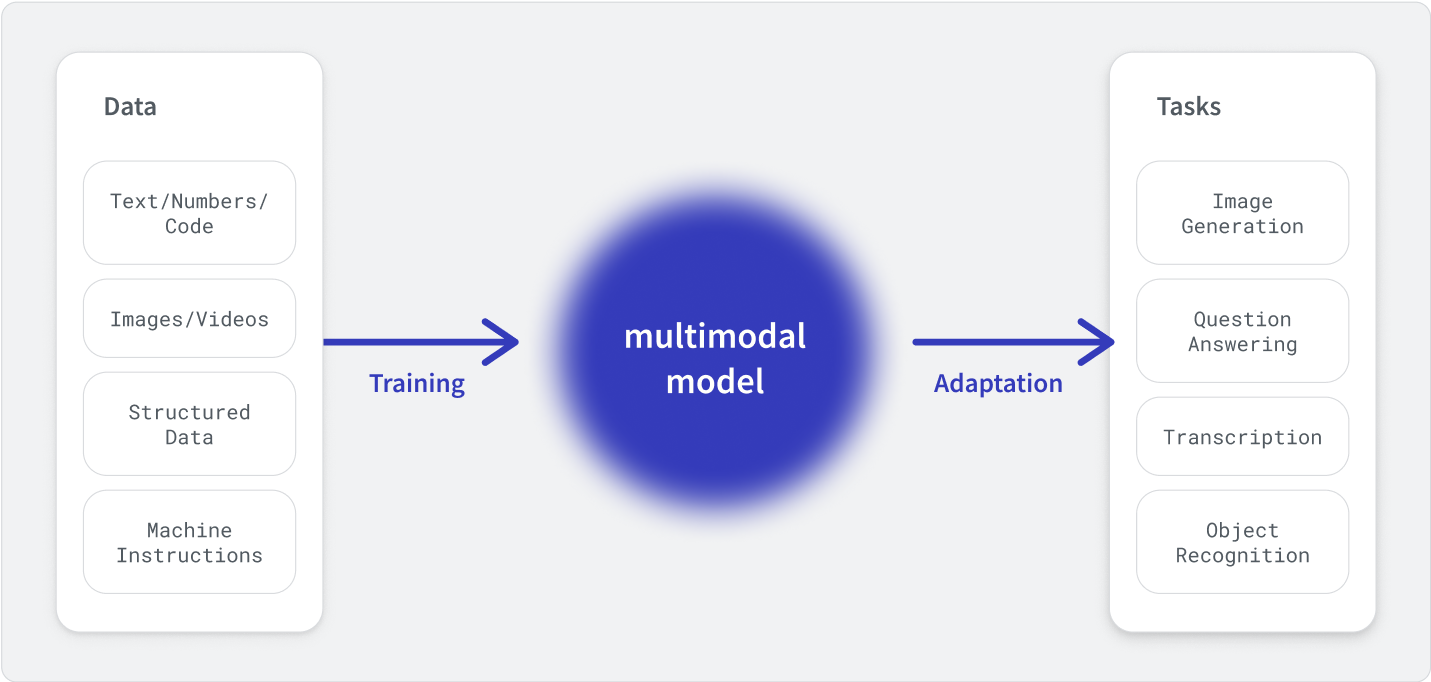

Multimodal

These models can reason and generate across multiple modalities, including text, image, sound, and more. While some of the image-based models can perform image-to-text and text-to-image generation, general-purpose multimodal AI includes ChatGPT and Gemini, which may seamlessly link multiple individual models together.

If you’re integrating one or more of these models into your applications, you’ll have to consider how you access them and how much you can modify them with fine-tuning. You can access some via APIs, some can be installed locally, and some can be bundled with your cloud services. Some are open source, so you can fine-tune their parameters, run them locally, and modify them as you see fit.

Data platforms

AI runs on data, so you’ll need somewhere to store that data. While a model comes pre-trained on a massive amount of data, you’ll still need to store data for fine-tuning and retrieval-augmented generation (RAG), as well as for the usual monitoring and analytics uses. Storage options usually fall into two categories: vector databases and data lakehouses.

“For databases that currently lack vector search functionality, it is only a matter of time before they implement these features.”

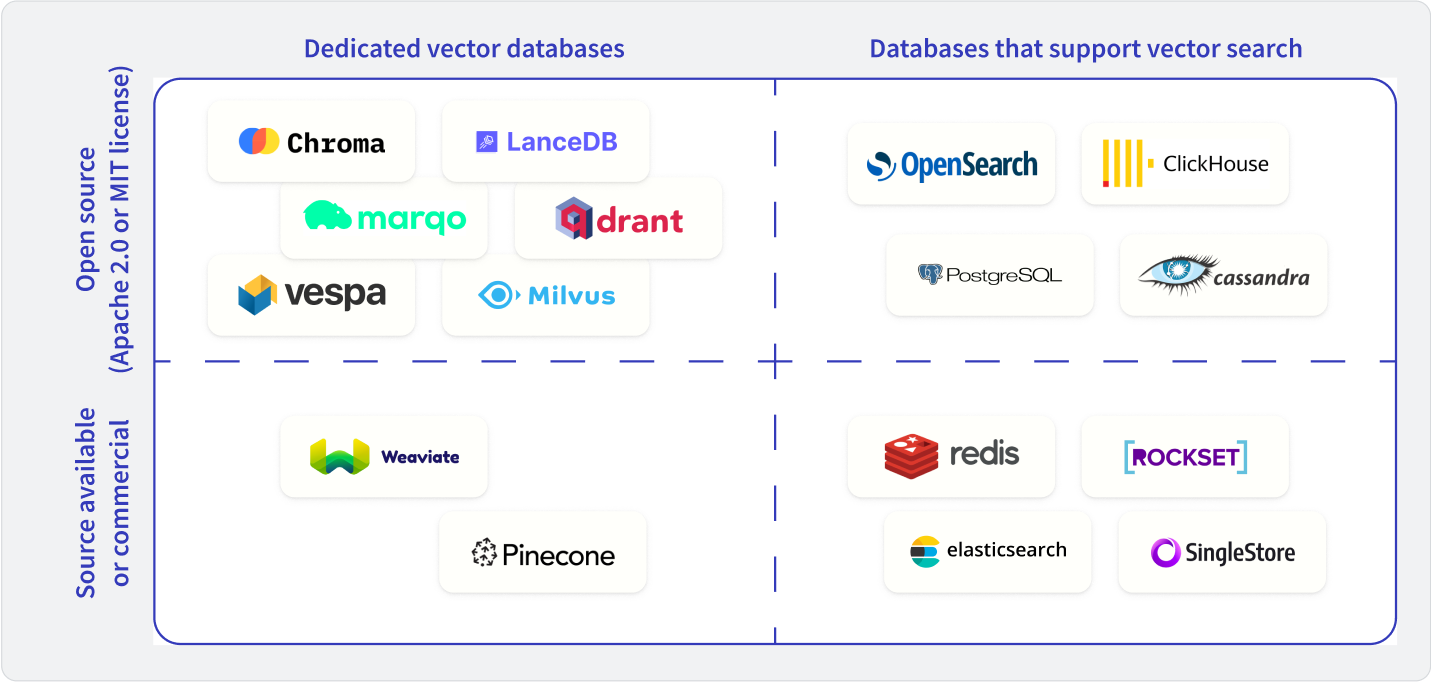

Vector databases

These store vectorized data that your AI solutions will pull from for search, chat, or RAG solutions. Major providers include Pinecone, Weaviate, Chroma, Qdrant, Milvus, and more. Plenty of existing databases have added vector storage and/or search, like MongoDB, PostgreSQL, Elasticsearch, Rockset, Redis, and many more. You can download and host many of these solutions yourself or turn to the provider for a fully managed database solution.
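To make "vector search" a little more concrete, here is a minimal sketch of the core operation these databases perform: ranking stored vectors by their similarity to a query vector. Everything in it is an assumption made for illustration. The document IDs and three-dimensional vectors are made up by hand, real embeddings come from an embedding model and have hundreds or thousands of dimensions, and production systems typically use approximate nearest-neighbor indexes rather than the brute-force scan shown here.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Brute-force search: score every stored vector, return the k best IDs."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical, hand-made "embeddings" keyed by document ID.
index = {
    "reset-password": [0.9, 0.1, 0.0],
    "billing-faq": [0.1, 0.9, 0.1],
    "api-rate-limits": [0.0, 0.2, 0.9],
}

# Hypothetical query vector, e.g. the embedding of a user's question.
query = [0.8, 0.2, 0.1]

results = top_k(query, index, k=2)
print(results)
```

In a RAG setup, the text behind the returned IDs would then be added to the model's prompt as context. A dedicated vector database handles the same job at scale, along with persistence, filtering, and indexing.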

Data lakehouses

These are large stores of mostly unstructured data (files, objects, etc.) that can be drawn from quickly, so they balance scalability, latency, and cost. Major providers include Databricks, Snowflake, Google BigQuery, Cloudera, Amazon Redshift, and Teradata Vantage, but there are many more. For these, you’ll also have to consider your infrastructure. A significant number of these providers offer hosting services, as the data requirements can balloon quickly. You can build your own solution here, but it can often mean connecting a number of data storage solutions together.

All-in-one solutions

Putting together a GenAI platform can be a pretty daunting task, and evaluating all the pieces is a significant project as well. Two distinguished engineers from IBM discussed how they put together their business-focused GenAI platform, watsonx, and the effort is monumental. When faced with that task, you may want to consider all-in-one solutions.

Many cloud providers, including AWS, Google Cloud, and Microsoft Azure, have set up all-in-one solutions that can be added as part of their services. If you’re already using one of these providers, the benefit is that you get infrastructure, models, training, and data platforms without having to assemble a tech stack on your own. The downside is increased risk of vendor lock-in and reduced flexibility, as the tools available will be subject to what the provider supports.

Other providers of all-in-one solutions include the aforementioned IBM and Nvidia.

The ecosystem of GenAI tools and technologies is already vast and growing rapidly. Major players have been consolidating their positions through acquisitions: Databricks bought MosaicML, Nvidia bought Run:ai, and OpenAI bought Rockset, just to name a few. Organizations looking to make their mark in the GenAI era will have to either use existing offerings or provide a unique value proposition.