Build vs. buy

Do you want to be an AI company, or do you want to be a company that uses AI in its products?

Where to begin

With any complex new technology, your organization needs to determine whether to build or buy your tools. Engineers build software, so it’s natural for them to want to build something when they need to solve new engineering problems. However, for those planning how an engineering team spends its time, building new solutions to already-solved problems isn’t always the best investment. There’s a third option that some call “borrow,” where you use open-source software but keep the option of contributing code or forking the repo. This isn’t an all-or-nothing proposition; you can buy or borrow for some aspects, build others, or customize the solutions that you adopt.

So when you’re embarking on your GenAI journey (or pivoting, for that matter), it’s important to ask: Do you want to be an AI company, or do you want to be a company that uses AI in its products? It’s an important distinction. If you have a specialized use case or want to control the code for your dependencies, then building may make sense. For most orgs and use cases, buying a solution or using open-source software will work. As always, an organization thrives when it focuses on building the software that directly affects its business.

Let’s suppose you want to build an AI stack. Here’s what that would take.

Build-a-bot

Building a foundation model is a massive and expensive undertaking. OpenAI reportedly spent more than $100 million training its GPT-4 model, which is rumored to have more than a trillion parameters. Its newer o1 and o3 models, whose “thinking” capabilities generate additional tokens for each prompt, likely cost more to train and more to run. OpenAI CEO Sam Altman has said that the company loses money on its pricey $200-per-month ChatGPT Pro subscription.

Bigger isn’t always better: researchers have found that models trained on targeted data sets can outperform larger general-purpose models on specialized tasks. On our podcast in January 2025, Inbal Shani, Chief Product Officer and Head of R&D at Twilio, stressed the enormous importance of data quality in achieving high-quality responses from AI. “The data is the key,” she said. “If you don't have the right data, then whatever AI you are going to apply on top of that is not useful.”

But you don’t have to run out and buy up GPUs to train your own model. Some companies offer model training as a service. The trade-off is giving up the quality and reliability of the big models in exchange for the control and security of a custom one. Also keep in mind that the bigger your model is, the more it costs to host.
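
A back-of-the-envelope sketch makes the hosting point concrete: just storing a model’s weights takes roughly its parameter count times the bytes per parameter at the chosen numeric precision. The model sizes and the precision table below are illustrative assumptions, and real serving also needs memory for the KV cache, activations, and runtime overhead, so treat the result as a lower bound.

```python
# Rough lower bound on accelerator memory needed just to hold a model's weights.
# Real deployments also need room for the KV cache, activations, and overhead.

BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,   # bf16 uses the same 2 bytes
    "int8": 1,
    "int4": 0.5,
}

def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory in gigabytes (10**9 bytes) needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

# Illustrative model sizes: 7 billion and 70 billion parameters.
for size in (7e9, 70e9):
    for precision in ("fp16", "int4"):
        gb = weight_memory_gb(size, precision)
        print(f"{size / 1e9:.0f}B params @ {precision}: ~{gb:.1f} GB")
```

Multiplying the parameter count by ten multiplies the memory floor by ten, which is one reason larger custom models cost more to host.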

As the LLM space matures, models are becoming commoditized, which makes buying a service more attractive overall. The years of work that the big AI companies have put into these models are very hard to duplicate at this point. For a monthly or per-token fee, you get the benefit of all that work in your own product.
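
To see where a per-token fee stops being the cheaper option, a simple break-even calculation compares the monthly API bill against a fixed monthly cost such as rented GPUs. The function names and both prices are hypothetical placeholders, not any vendor’s actual rates.

```python
# Compare a pay-per-token API bill with a fixed monthly cost (e.g. self-hosted GPUs).
# Both prices below are hypothetical placeholders.

def api_monthly_cost(tokens_per_month: int, usd_per_million_tokens: float) -> float:
    """Monthly bill for a pay-per-token API."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens

def break_even_tokens(fixed_monthly_usd: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which the fixed cost equals the per-token bill."""
    return fixed_monthly_usd / usd_per_million_tokens * 1_000_000

PRICE_PER_M = 5.0            # hypothetical API price, USD per million tokens
SELF_HOST_MONTHLY = 3000.0   # hypothetical GPU rental, USD per month

for volume in (100_000_000, 1_000_000_000):
    api = api_monthly_cost(volume, PRICE_PER_M)
    cheaper = "API" if api < SELF_HOST_MONTHLY else "self-hosting"
    print(f"{volume:,} tokens/month: API ${api:,.0f} vs self-host ${SELF_HOST_MONTHLY:,.0f} -> {cheaper}")

print(f"break-even: {break_even_tokens(SELF_HOST_MONTHLY, PRICE_PER_M):,.0f} tokens/month")
```

A real comparison would also count the engineering and operations time that self-hosting requires, which this sketch leaves out.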

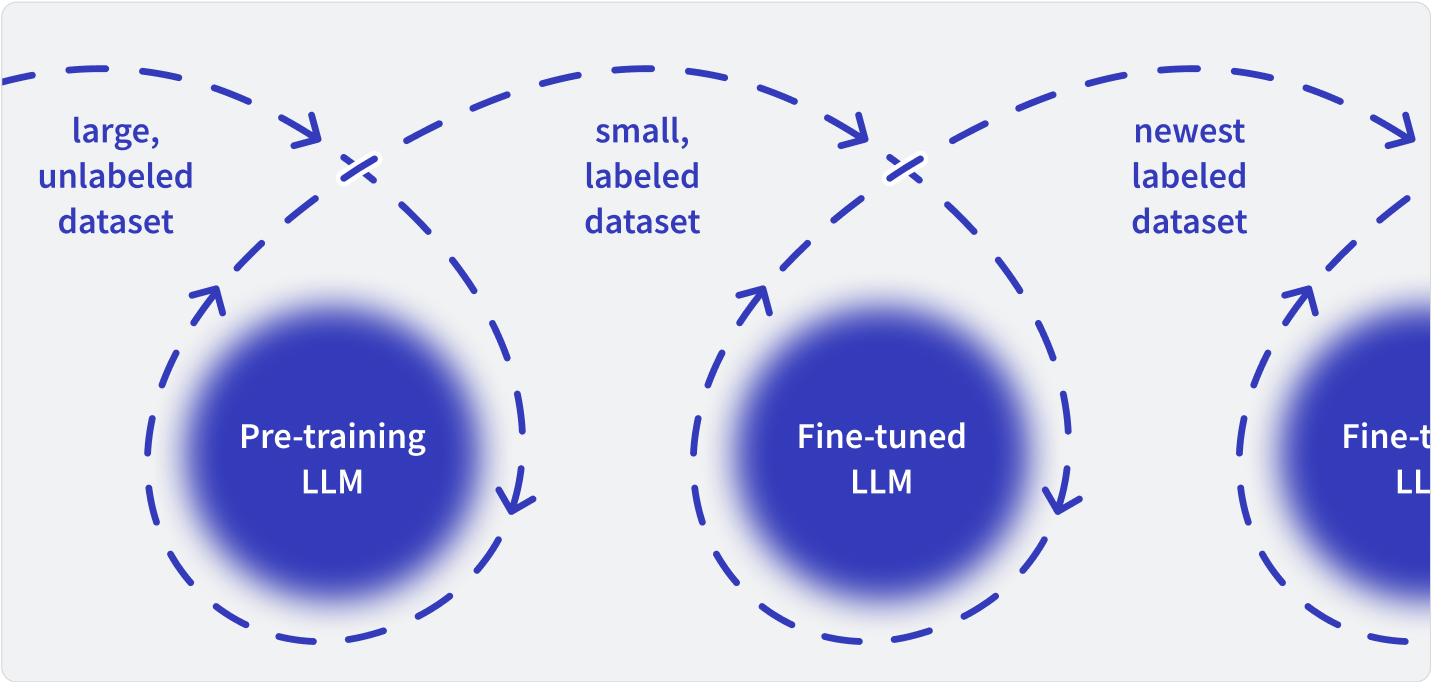

You could take the “borrow” route and fine-tune an open-source model. This still requires paying for GPU time, but it lets you get the benefits of a large LLM while customizing it on your own data. Parameter-efficient techniques like LoRA (low-rank adaptation) let you customize a model by training a small set of added weights instead of updating every weight in the entire LLM.
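
The core idea of LoRA, as described in the original paper, is to freeze the pretrained weight matrix W and learn a low-rank update B·A scaled by alpha / r, so the layer computes W x + (alpha / r) B A x. This dependency-free sketch uses tiny matrices and hypothetical helper names; in practice you would use a framework such as PyTorch, typically through a library like Hugging Face PEFT, rather than hand-written loops.

```python
import random

Vector = list[float]
Matrix = list[list[float]]  # list of rows

def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix by a column vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def lora_forward(x: Vector, W: Matrix, A: Matrix, B: Matrix, alpha: float) -> Vector:
    """LoRA-adapted linear layer: h = W x + (alpha / r) * B (A x).

    W (d x k) is the frozen pretrained weight; A (r x k) and B (d x r)
    are the small trainable low-rank factors.
    """
    r = len(A)
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [h + (alpha / r) * dh for h, dh in zip(base, delta)]

def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable parameter counts: (full fine-tuning of W, LoRA factors A and B)."""
    return d * k, r * (d + k)

random.seed(0)
d, k, r = 4, 3, 2
W = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]     # frozen
A = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # small random init
B = [[0.0] * r for _ in range(d)]                                   # zero init
x = [1.0, 2.0, 3.0]

# With B = 0 the adapter starts as a no-op: output equals the base layer's.
print(lora_forward(x, W, A, B, alpha=16) == matvec(W, x))

full, lora = trainable_params(4096, 4096, 8)
print(f"full: {full:,}  LoRA (r=8): {lora:,}  ({lora / full:.2%})")
```

Because B starts at zero, the adapted layer initially behaves exactly like the pretrained one, and for a 4096 × 4096 layer at rank 8 the adapter trains about 0.39% as many parameters as full fine-tuning.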

It’s unlikely that you’d want to build your own database or a machine learning framework like PyTorch. Many of the standard tools in these areas are open source, and reinventing the wheel here is usually more trouble than it’s worth.

Here are a few areas where you may find yourself building your own specialized tooling.

Orchestration and agent frameworks

This category covers anything that connects LLM output to other processes: think LlamaIndex, AutoGPT, or AutoChain. Much of what software does is automate repetitive work, and these frameworks provide ready-made connections between the processes that AI agents need to function. Some developers, especially those further along in their GenAI journey, may find it easier to skip the frameworks and either call database and LLM libraries directly or build their own agentic frameworks.
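
For teams that skip the frameworks, the core of an agent can be a short loop: ask the model what to do next, run the tool it picks, feed the result back, and stop when it produces an answer. In this sketch, `fake_llm` is a hypothetical stand-in for a real model call, and the JSON reply shape and the `get_order_status` tool are assumptions for illustration, not any framework’s API.

```python
import json
from typing import Callable

# Hypothetical tool; a real one would query a database or an internal API.
def get_order_status(order_id: str) -> str:
    fake_db = {"A123": "shipped", "B456": "processing"}
    return fake_db.get(order_id, "unknown")

TOOLS: dict[str, Callable[..., str]] = {"get_order_status": get_order_status}

def fake_llm(messages: list[dict]) -> str:
    """Hypothetical stand-in for a model call. Replies with JSON holding
    either a tool request or a final answer."""
    last = messages[-1]
    if last["role"] == "user":
        return json.dumps({"tool": "get_order_status", "args": {"order_id": "A123"}})
    return json.dumps({"answer": f"Your order is {last['content']}."})

def run_agent(question: str, llm: Callable[[list[dict]], str], max_steps: int = 5) -> str:
    """Loop until the model answers, executing any tool it asks for."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        raw = llm(messages)
        reply = json.loads(raw)
        if "answer" in reply:
            return reply["answer"]
        messages.append({"role": "assistant", "content": raw})
        result = TOOLS[reply["tool"]](**reply["args"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("Where is order A123?", fake_llm))
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools without ever answering.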

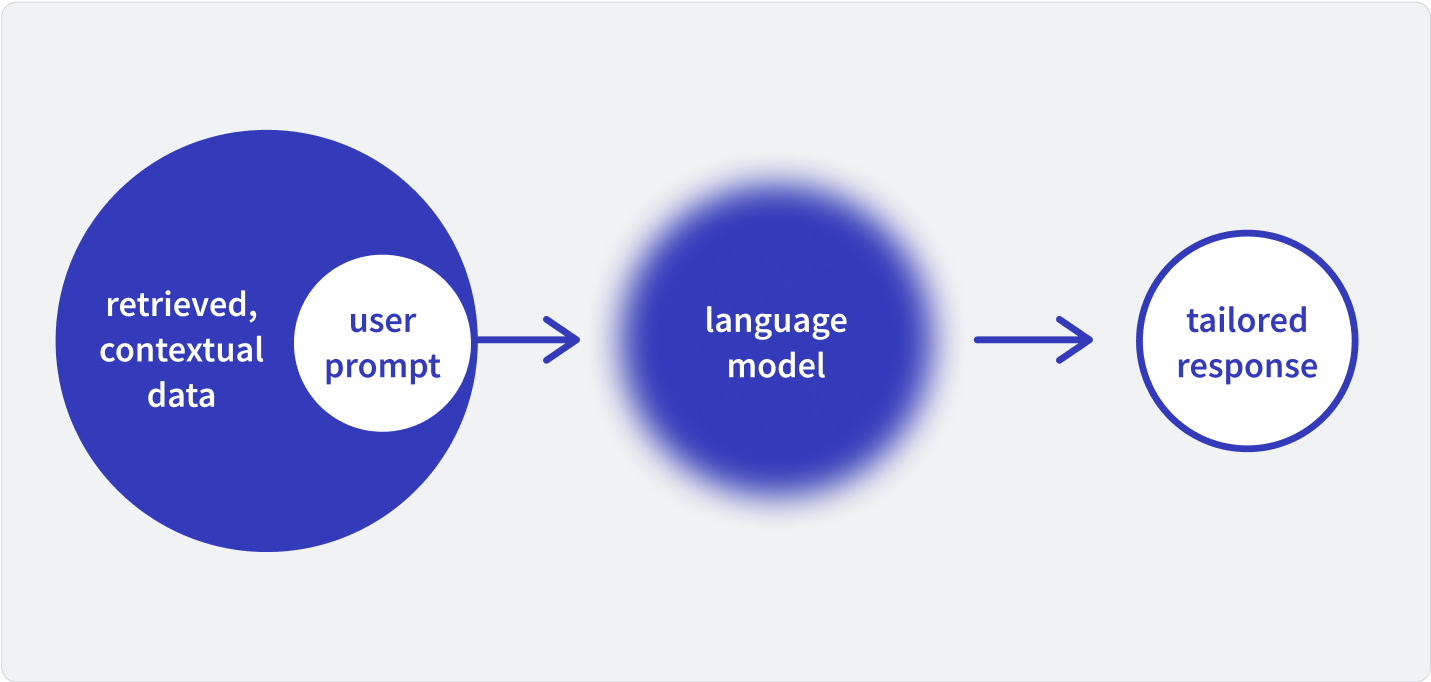

Retrieval-augmented generation

RAG systems improve LLM output by retrieving relevant context, typically from a vector database, and adding it to the prompt, with orchestration tools tying the steps together. You can build these systems in-house, but a flood of new tools and frameworks can help with the process. Some data platforms provide an easy RAG setup if you host your data with them.
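
A RAG pipeline boils down to three steps: embed the documents and the query, retrieve the closest documents, and place them in the prompt. This sketch swaps in a toy bag-of-words “embedding” and an in-memory list where a real system would use a learned embedding model and a vector database; the function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words term counts (a stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the user's question with retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

docs = [
    "Refunds are issued within 5 business days of receiving a return.",
    "Our office is closed on public holidays.",
    "Returns must be shipped with the original packing slip.",
]
print(build_prompt("How long do refunds take?", docs, k=1))
```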

Fine-tuning and inference

Fine-tuning continues training an LLM on new data, changing the model’s weights and biases. Many applications want this sort of feedback mechanism, and there are many different approaches and implementations. Even if you want to build your own, this is an area where you should check the open-source options first.
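
Mechanically, fine-tuning means starting from pretrained parameters and continuing gradient descent on new data. This toy sketch uses a two-input linear model as a stand-in for an LLM, with made-up “pretrained” values and training data, to show the weights and bias shifting toward a new task; real LLM fine-tuning applies the same idea across billions of parameters with a framework.

```python
def predict(w: list[float], b: float, x: list[float]) -> float:
    """Linear model: w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def mse(w: list[float], b: float, data) -> float:
    """Mean squared error over (x, y) pairs."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

def fine_tune(w: list[float], b: float, data, lr: float = 0.1, epochs: int = 500):
    """Continue training (w, b) on new data with full-batch gradient descent on MSE."""
    w = list(w)
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y
            for i, xi in enumerate(x):
                grad_w[i] += 2 * err * xi / n
            grad_b += 2 * err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Hypothetical "pretrained" parameters and new-task data (generated by y = 2*x1 - x2 + 1).
pretrained_w, pretrained_b = [1.0, 1.0], 0.0
new_data = [([0.0, 0.0], 1.0), ([1.0, 0.0], 3.0), ([0.0, 1.0], 0.0), ([1.0, 1.0], 2.0)]

w, b = fine_tune(pretrained_w, pretrained_b, new_data)
print(f"loss before: {mse(pretrained_w, pretrained_b, new_data):.3f}")
print(f"loss after:  {mse(w, b, new_data):.6f}  weights={w} bias={b}")
```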

Inference is a growing concern because you may run inference not just on the prompt tokens but also on additional data pulled in to respond to specific prompts. If you’re running your own reasoning or chain-of-thought process, you’ll need to plan capacity for real-time, always-on inference.
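
A rough capacity estimate shows why retrieved context and reasoning tokens matter: every extra token per request is multiplied by peak traffic. All token counts, the peak request rate, and the per-replica throughput below are hypothetical, and the sketch sizes replicas only by generated tokens, ignoring prompt processing, batching, and latency targets.

```python
import math
from dataclasses import dataclass

@dataclass
class RequestProfile:
    """Token budget for one request (all example numbers are hypothetical)."""
    prompt_tokens: int      # user prompt plus system instructions
    retrieved_tokens: int   # extra data pulled in for this prompt (e.g. RAG context)
    reasoning_tokens: int   # intermediate chain-of-thought tokens the model generates
    output_tokens: int      # the visible answer

    @property
    def input_tokens(self) -> int:
        return self.prompt_tokens + self.retrieved_tokens

    @property
    def generated_tokens(self) -> int:
        return self.reasoning_tokens + self.output_tokens

def replicas_needed(profile: RequestProfile, peak_rps: float, replica_gen_tps: float) -> int:
    """Model replicas needed for generation to keep up at peak load.

    Counts generated tokens only; prompt processing also costs compute
    but is ignored here for simplicity.
    """
    return math.ceil(profile.generated_tokens * peak_rps / replica_gen_tps)

plain = RequestProfile(prompt_tokens=500, retrieved_tokens=0, reasoning_tokens=0, output_tokens=300)
reasoning = RequestProfile(prompt_tokens=500, retrieved_tokens=3000, reasoning_tokens=2000, output_tokens=300)

PEAK_RPS = 10          # hypothetical peak requests per second
REPLICA_TPS = 2500     # hypothetical generated tokens/sec one replica sustains

for name, p in (("plain chat", plain), ("RAG + reasoning", reasoning)):
    print(f"{name}: {p.input_tokens} in / {p.generated_tokens} out per request, "
          f"{replicas_needed(p, PEAK_RPS, REPLICA_TPS)} replicas at peak")
```

Under these assumed numbers, adding retrieval and reasoning raises the replica count from 2 to 10 for the same user traffic.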

Monitoring, explainability, and debiasing

There’s a whole slew of tools and techniques for evaluating, tracking, and hacking how generative models produce results. If you have an excellent data science team, you may come up with novel solutions. But with all the attention on hallucinations and GenAI’s failings, there is a lot of active research and development in this area. You may also want to consider the legal ramifications of DIY solutions here. Some companies, like IBM, will indemnify their clients against legal exposure arising from their models’ output, but that’s certainly not universal.
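
As a starting point before adopting a dedicated tool, a thin wrapper can log each call’s latency and a crude grounding score: the share of words in the response that also appear in the retrieved context. This word-overlap heuristic is an assumption chosen for simplicity, not a real hallucination detector, and `Monitor` and `grounding_score` are hypothetical names.

```python
import re
import time
from dataclasses import dataclass, field
from typing import Callable

def words(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def grounding_score(response: str, context: str) -> float:
    """Crude heuristic: fraction of the response's words that also appear in the context."""
    resp = words(response)
    return len(resp & words(context)) / len(resp) if resp else 1.0

@dataclass
class Record:
    prompt: str
    response: str
    latency_s: float
    grounding: float

@dataclass
class Monitor:
    records: list[Record] = field(default_factory=list)

    def call(self, llm: Callable[[str], str], prompt: str, context: str) -> str:
        """Call the model, then log latency and grounding for later review."""
        start = time.perf_counter()
        response = llm(prompt)
        latency = time.perf_counter() - start
        self.records.append(Record(prompt, response, latency, grounding_score(response, context)))
        return response

    def flagged(self, threshold: float = 0.5) -> list[Record]:
        """Records whose grounding score falls below the threshold."""
        return [r for r in self.records if r.grounding < threshold]

monitor = Monitor()
context = "Refunds are issued within 5 business days."
# Stub models standing in for real LLM calls.
monitor.call(lambda p: "Refunds are issued within 5 business days.", "How long do refunds take?", context)
monitor.call(lambda p: "Refunds are instant and include a bonus gift card.", "How long do refunds take?", context)

for r in monitor.flagged():
    print(f"low grounding ({r.grounding:.2f}): {r.response}")
```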

Summary

Knowing what you need and how to judge what tools will do the job can be a challenge in itself. For our own AI efforts, we set up a separate Community within our internal Stack Overflow for Teams instance and gathered AI-related knowledge there. You’ll need some way to keep track of how fast the field is moving and what new developments will affect your business goals.