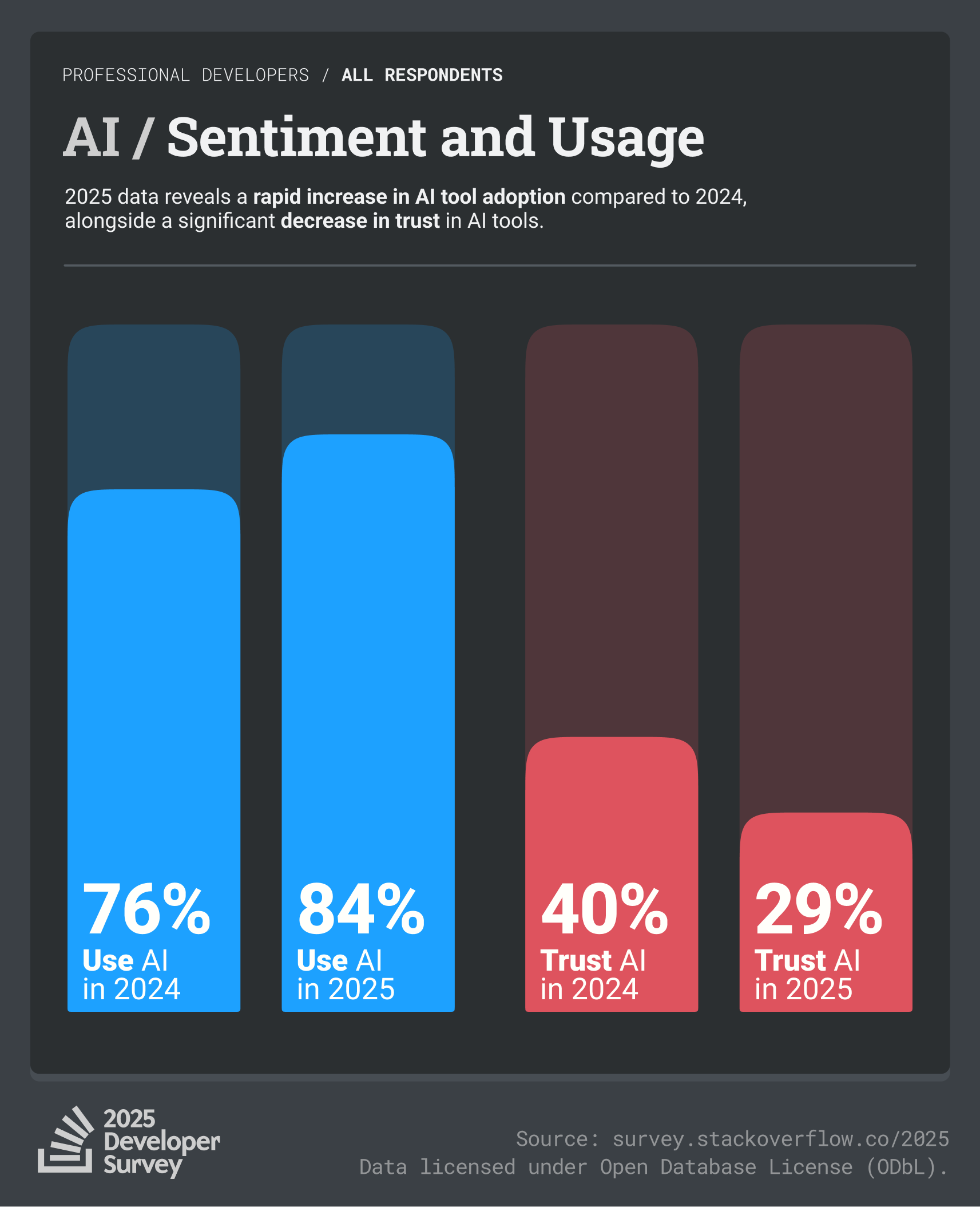

Learning #1. AI adoption is accelerating, but trust is declining

Our survey shows that 84% of developers use or plan to use AI tools, up from 76% last year. Yet developer trust in those tools is falling fast: only 29% of respondents this year say they trust AI outputs to be accurate, down from 40% in 2024.

This adoption-trust gap creates a serious risk for companies betting heavily on AI. While developers see value in speed and automation, they are skeptical of the quality and reliability of AI-generated results, largely because they know the answers these tools provide are often inaccurate. According to our survey, more developers actively distrust the accuracy of AI tools (46%) than trust it (33%), and only 3% report that they "highly trust" the output.

TL;DR for leaders

Your developers are going to continue to use AI tools in their workflows, so you need to ensure they can trust the output. Trusted, human-curated knowledge is fundamental to fuel and guide these tools. Smart business leaders are investing in a centralized knowledge base, built and verified by their teams, that can power AI systems. By pairing automation with trusted human oversight, you can:

- Ensure accuracy

- Reduce rework, and

- Build long-term confidence in the tools your team uses

AI tools alone won’t deliver sustainable value. Companies that prioritize validating the knowledge AI tools use will win in the long run.